Storage Spaces (2025)

Overview

Teaching: 30 min

Exercises: 15 min

Questions

What are the types and roles of DUNE’s data volumes?

What are the commands and tools to handle data?

Objectives

Understanding the data volumes and their properties

Displaying volume information (total size, available size, mount point, device location)

Differentiating the commands to handle data between grid accessible and interactive volumes

Table of Contents for 02-storage-spaces

- This is an updated version of the 2023 training - Live Notes

- Introduction

- Vocabulary

- Interactive storage volumes (mounted on dunegpvmXX.fnal.gov or lxplus.cern.ch)

- Summary on storage spaces

- Monitoring and Usage

- Commands and tools

- Quiz

- Useful links to bookmark

This is an updated version of the 2023 training

Workshop Storage Spaces Video from December 2024

Introduction

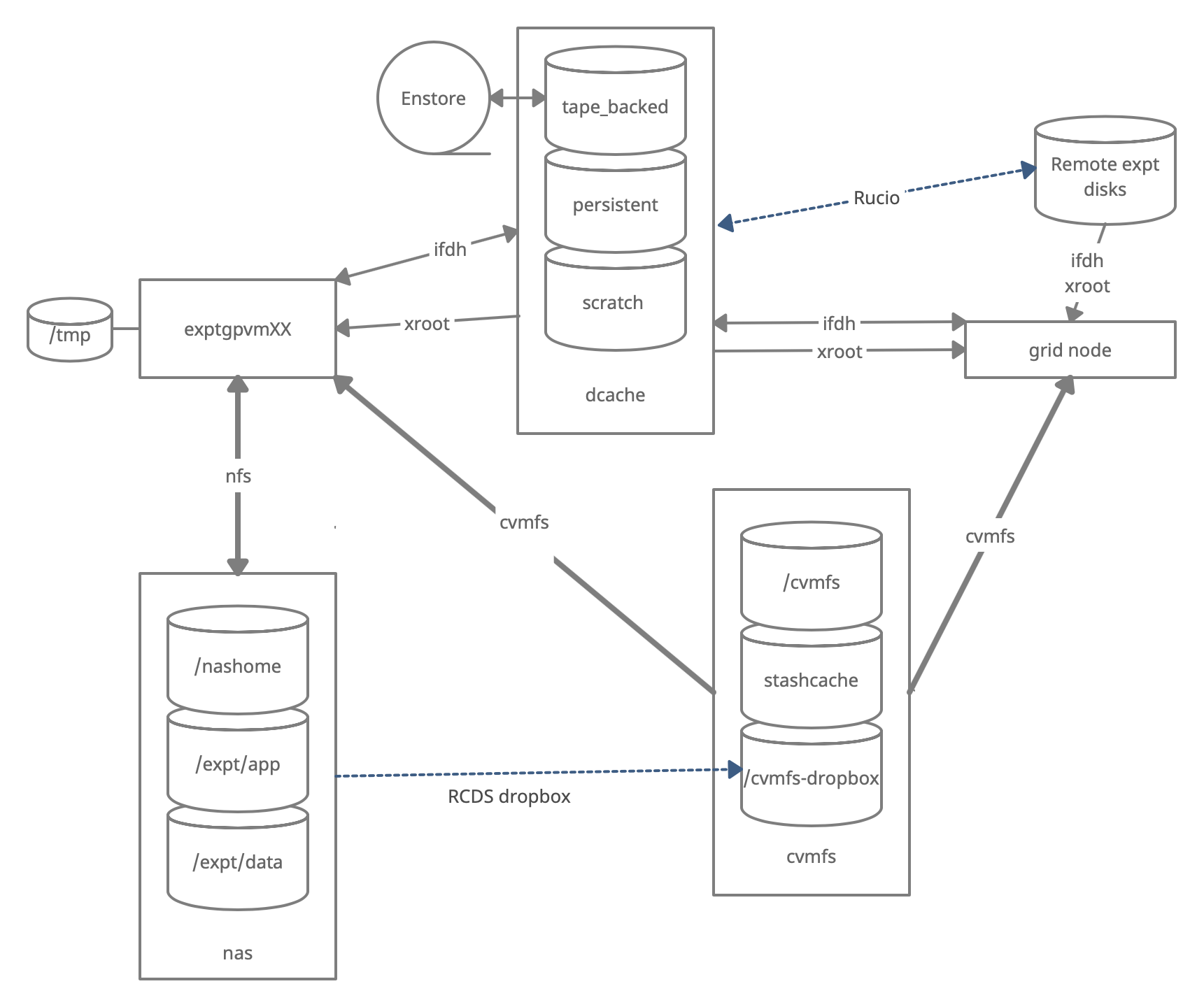

There are five types of storage volumes that you will encounter at Fermilab (or CERN):

- local hard drives

- network attached storage

- large-scale, distributed storage

- Rucio Storage Elements (RSEs) (a specific type of large-scale, distributed storage)

- CERN Virtual Machine File System (CVMFS)

Each has its own advantages and limitations, and knowing which one to use when isn’t always straightforward or obvious. But with some foresight, you can avoid some of the common pitfalls that have caught out other users.

Vocabulary

What is POSIX? A volume with POSIX access (Portable Operating System Interface, see Wikipedia) allows users to directly read, write and modify files using standard commands and calls, e.g. from bash scripts or with fopen(). In general, these are volumes mounted directly into the operating system.
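On a POSIX volume, ordinary shell commands can create, append to and edit a file in place. The function below is a minimal sketch of that; it works on a throwaway file from mktemp (standing in for a file in, say, your home area) and assumes GNU sed for the in-place `-i` edit. `posix_demo` is a hypothetical name used only for illustration.

```shell
# Hypothetical demo of POSIX-style access: create, append, edit in place, read.
posix_demo() {
  f=$(mktemp) || return 1          # throwaway file on a local POSIX volume
  echo "first line" > "$f"         # create (or overwrite) the file
  echo "second line" >> "$f"       # append to it
  sed -i 's/first/edited/' "$f"    # modify it in place (GNU sed syntax)
  cat "$f"                         # read it back
  rm -f "$f"                       # clean up
}

posix_demo
```

On the grid-accessible dCache areas described below, the in-place edit step is exactly what you cannot do, since files there are immutable.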

What is meant by ‘grid accessible’? Volumes that are grid accessible require specific tool suites to handle data stored there. Grid access to a volume is NOT POSIX access. This will be explained in the following sections.

What is immutable? An immutable file is one that, once written to the volume, cannot be modified. It can only be read, moved, or deleted. This property is generally a restriction imposed by the storage volume on which the file is stored. Immutable storage is therefore not a good choice for code or other files you want to change.

Interactive storage volumes (mounted on dunegpvmXX.fnal.gov or lxplus.cern.ch)

Your home area

Your home area is similar to the user’s local hard drive, but network mounted:

- very high access speed, on top of full POSIX access

- network volumes are NOT safe to store certificates and tickets

- important: users have a single home area at FNAL used for all experiments

- not accessible from grid worker nodes

- not for code development (home area is 5 GB)

At Fermilab

- you need a valid Kerberos ticket in order to access files in your home area

- periodic snapshots are taken so you can recover deleted files (/nashome/.snapshot)

- permissions are set so your collaborators cannot see files in your home area

- you can find your quota with the command

quota -u -m -s

At CERN

- CERN uses AFS for your home area

- AFS info from CERN

- get quota via the command

fs listquota

Note: your home area is small and private

You want to use your home area for things that only you should see. If you want to share files with collaborators you need to put them in the /app/ or /data/ areas described below.

Locally mounted volumes

Local volumes are physical disks, mounted directly on the computer:

- physically inside the computer node you are remotely accessing

- mounted on the machine through the motherboard (not over network)

- used as temporary storage for infrastructure services (e.g. /var, /tmp)

- can be used to store certificates and tickets. (These are saved there automatically with owner-read enabled and other permissions disabled.)

- usually very small and should not be used to store data files or for code development

- files on these volumes are not backed up
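To see the "owner-read enabled, other permissions disabled" property for yourself, you can inspect a file's permission bits. Below is a minimal sketch, assuming GNU coreutils `stat`; `is_owner_only` is a hypothetical helper, not a DUNE or Fermilab tool.

```shell
# Hypothetical helper: succeed only if group and others have no permissions
# on the given file (octal mode ends in "00", e.g. 600 or 400).
is_owner_only() {
  mode=$(stat -c '%a' -- "$1" 2>/dev/null) || return 1   # octal mode via GNU stat
  case "$mode" in
    *00) return 0 ;;   # no group/other bits set
    *)   return 1 ;;   # group or others have some access
  esac
}
```

If your Kerberos credential cache is a plain file (i.e. `KRB5CCNAME` starts with `FILE:`; `klist` shows which cache you are using), then `is_owner_only "${KRB5CCNAME#FILE:}" && echo "ticket cache is private"` should succeed.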

Network Attached Storage (NAS)

NAS elements behave similarly to a locally mounted volume.

- functions similarly to services such as Dropbox or OneDrive

- fast and stable POSIX access to these volumes

- volumes available only on a limited number of computers or servers

- not available on grid computing (FermiGrid, Open Science Grid, WLCG, HPC, etc.)

At Fermilab

- /exp/dune/app/users/…. has periodic snapshots in /exp/dune/app/.... /.snap, but /exp/dune/data does NOT

- easy to share files with colleagues using /exp/dune/data and /exp/dune/app

- See the Ceph documentation for details on those systems.

At CERN

At CERN the analog is EOS. See EOS for information about using EOS.

Grid-accessible storage volumes

The following areas are grid accessible via methods such as xrdcp/xrootd and ifdh. You can read files in dCache across DUNE if you have the appropriate authorization. Writing files may require special permissions.

- At Fermilab, an instance of dCache+CTA is used for large-scale, distributed storage with capacity for more than 100 PB of storage and O(10000) connections.

- At CERN, the analog is EOS+CASTOR

At Fermilab (CTA) and CERN (CASTOR), files are backed up to tape and may not be immediately accessible.

DUNE also maintains disk copies of the most recent files across many sites worldwide.

Whenever possible, these storage elements should be accessed over xrootd (see next section) as the mount points on interactive nodes are slow, unstable, and can cause the node to become unusable. Here are the different dCache volumes:

Persistent dCache

/pnfs/dune/persistent/ is “persistent” storage. If a file is in persistent dCache, the data in the file is actively available for reads at any time and will not be removed until manually deleted by the user. The persistent dCache contains 3 logical areas:

- (1) /pnfs/dune/persistent/users, in which every user has a quota of up to 5 TB total.

- (2) /pnfs/dune/persistent/physicsgroups, which is dedicated to DUNE physics groups and managed by the respective conveners of those groups. https://wiki.dunescience.org/wiki/DUNE_Computing/Using_the_Physics_Groups_Persistent_Space_at_Fermilab gives more details on how to get access to these groups. In general, if you need to store more than 5 TB in persistent dCache you should be working with the physics groups areas.

- (3) The “staging” area /pnfs/dune/persistent/staging, which is not accessible by regular users but is by far the largest of the three. It is used for official datasets.

Scratch dCache

/pnfs/dune/scratch is a large volume shared across all experiments. When a new file is written to scratch space, old files are removed in order to make room for the newer file. Removal follows a Least Recently Used (LRU) policy and is performed by an automated daemon.
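The LRU idea can be illustrated with a toy model: given each file's last-access time and size, evict the least recently accessed files first until the total fits within a capacity. This is a sketch under our own assumptions (the input format and a single capacity threshold are invented for illustration); it is not the actual dCache daemon, whose thresholds and bookkeeping are not described here.

```shell
# Toy LRU eviction model (hypothetical, for illustration only).
# stdin:  one file per line as "<last_access_epoch> <size> <name>" (no spaces in names)
# $1:     capacity, in the same units as <size>
# stdout: names of evicted files, least recently used first
lru_evict() {
  sort -n -k1,1 | awk -v cap="$1" '
    { name[NR] = $3; size[NR] = $2; total += $2 }
    END {
      # walk from the oldest access time, evicting until we fit
      for (i = 1; i <= NR && total > cap + 0; i++) {
        print name[i]
        total -= size[i]
      }
    }'
}

printf '300 10 c.root\n100 10 a.root\n200 10 b.root\n' | lru_evict 15
```

Here a.root and b.root would be evicted (oldest access first), leaving only c.root, the most recently used file.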

Tape-backed dCache

These are disk-based storage areas whose contents are mirrored to permanent storage on CTA tape.

Files may not be available for immediate reading on disk; they may first need to be ‘staged’ from tape (see video of a tape storage robot).

Rucio Storage Elements

Rucio Storage Elements (or RSEs) are storage elements provided by collaborating institutions for official DUNE datasets. Data stored in DUNE RSEs must be fully cataloged in the metacat catalog and are managed by the DUNE data management team. This is where you find the official data samples.

See the data management lesson for much more information about using the rucio system to find official data.

CVMFS

CVMFS, the CERN Virtual Machine File System, is a centrally managed storage area that is distributed over the network and used to distribute common software and a limited set of reference files. CVMFS is mounted over the network and can be used on grid nodes, interactive nodes, and personal desktops/laptops. It is read only and is the most common source for centrally maintained versions of experiment software libraries/executables. CVMFS is mounted at /cvmfs/, and access is POSIX-like but read only.

See CVMFS for more information.

What is my quota?

We use multiple systems, so there are multiple ways of checking your disk quota.

Your home area at FNAL

quota -u -m -s

Your home area at CERN

fs listquota

The /app/ and /data/ areas at FNAL

These use the Ceph file system which has directory quotas instead of user quotas. See the quota section of: https://fifewiki.fnal.gov/wiki/Ceph#Quotas

The most useful commands for general users are

getfattr -n ceph.quota.max_bytes /exp/dune/app/users/$USER

getfattr -n ceph.quota.max_bytes /exp/dune/data/users/$USER
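The raw attribute is a byte count. A small sketch that also reads the recursive usage attribute `ceph.dir.rbytes` and prints both in human-readable units might look like this. It assumes `getfattr` (from the attr package) and GNU `numfmt` are available; `ceph_usage` and `bytes_to_human` are hypothetical helper names, and treating an empty or zero `max_bytes` as "no quota set" is our own assumption.

```shell
# Convert a byte count to IEC (1024-based) units, e.g. 1073741824 -> 1.0G.
bytes_to_human() {
  numfmt --to=iec "$1"
}

# Hypothetical helper: print the Ceph directory quota and recursive usage.
ceph_usage() {
  dir=$1
  max=$(getfattr --only-values -n ceph.quota.max_bytes "$dir" 2>/dev/null)
  used=$(getfattr --only-values -n ceph.dir.rbytes "$dir" 2>/dev/null)
  case "$max" in
    ''|0) max_h="not set" ;;            # attribute missing or zero: assume no quota
    *)    max_h=$(bytes_to_human "$max") ;;
  esac
  case "$used" in
    '') used_h="unknown" ;;             # not a Ceph directory, or getfattr missing
    *)  used_h=$(bytes_to_human "$used") ;;
  esac
  echo "$dir quota: $max_h used: $used_h"
}
```

For example, `ceph_usage /exp/dune/app/users/$USER` and `ceph_usage /exp/dune/data/users/$USER`.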

EOS at CERN

export EOS_MGM_URL=root://eosuser.cern.ch

eos quota

Fermilab dCache

Go to https://fndca.fnal.gov/cgi-bin/quota.py. You need to be on the Fermilab VPN; otherwise the page hangs without loading.

Note - When reading from dCache always use the root: syntax, not direct /pnfs

The Fermilab dCache areas have NFS mounts. These are for your convenience: they allow you to look at the directory structure and, for example, remove files. However, NFS access is slow, inconsistent, and can hang the machine if I/O-heavy processes use it. Always use the xroot root://<site>… syntax when reading/accessing files instead of /pnfs/ directly. Once you have your DUNE environment set up, the pnfs2xrootd command can do the conversion to root: format for you (only for files at FNAL for now).

Summary on storage spaces

Full documentation: Understanding Storage Volumes

| Volume | Quota/Space | Retention Policy | Tape Backed? | Retention Lifetime on disk | Use for | Path | Grid Accessible |
|---|---|---|---|---|---|---|---|
| Persistent dCache | Yes (5)/~400 TB/exp | Managed by User/Exp | No | Until manually deleted | immutable files w/ long lifetime | /pnfs/dune/persistent/users | Yes |
| Persistent PhysGrp | Yes (50)/~500 TB/exp | Managed by PhysGrp | No | Until manually deleted | immutable files w/ long lifetime | /pnfs/dune/persistent/physicsgroups | Yes |
| Scratch dCache | No/no limit | LRU eviction - least recently used file deleted | No | Varies, ~30 days (NOT guaranteed) | immutable files w/ short lifetime | /pnfs/dune/scratch | Yes |
| Tape backed dCache | No/O(40) PB | LRU eviction (from disk) | Yes | Approx 30 days | Long-term archive | /pnfs/dune/… | Yes |
| NAS Data | Yes (~1 TB)/62 TB total | Managed by Experiment | No | Until manually deleted | Storing final analysis samples | /exp/dune/data | No |
| NAS App | Yes (~100 GB)/~50 TB total | Managed by Experiment | No | Until manually deleted | Storing and compiling software | /exp/dune/app | No |
| Home Area (NFS mount) | Yes (~10 GB) | Centrally Managed by CCD | No | Until manually deleted | Storing global environment scripts (All FNAL Exp) | /nashome/<letter>/<uid> | No |
| Rucio | 25 PB | Centrally Managed by DUNE | Yes | Each file has retention policy | Official DUNE Data samples | use rucio/justIN to access | Yes |
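As a quick way to apply the summary table, here is a hypothetical `classify_path` helper that maps a path prefix to its row and says whether that area is grid accessible. It only knows the prefixes listed in the table (plus /cvmfs, which is grid accessible but read only); the persistent staging area is lumped in with the generic Persistent dCache row, and anything else is reported as unknown.

```shell
# Hypothetical helper: map a path to its row in the storage summary table.
classify_path() {
  case "$1" in
    /pnfs/dune/persistent/physicsgroups|/pnfs/dune/persistent/physicsgroups/*)
      echo "Persistent PhysGrp (grid accessible: Yes)" ;;
    /pnfs/dune/persistent|/pnfs/dune/persistent/*)
      echo "Persistent dCache (grid accessible: Yes)" ;;
    /pnfs/dune/scratch|/pnfs/dune/scratch/*)
      echo "Scratch dCache (grid accessible: Yes)" ;;
    /pnfs/dune/tape_backed|/pnfs/dune/tape_backed/*)
      echo "Tape backed dCache (grid accessible: Yes)" ;;
    /exp/dune/data|/exp/dune/data/*)
      echo "NAS Data (grid accessible: No)" ;;
    /exp/dune/app|/exp/dune/app/*)
      echo "NAS App (grid accessible: No)" ;;
    /nashome/*)
      echo "Home Area (grid accessible: No)" ;;
    /cvmfs/*)
      echo "CVMFS (grid accessible: Yes, read only)" ;;
    *)
      echo "unknown" ;;
  esac
}

classify_path /pnfs/dune/scratch/users/alice/out.root
```

The user name alice is a placeholder; the call above prints "Scratch dCache (grid accessible: Yes)".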

Monitoring and Usage

Remember that these volumes are not infinite, and monitoring your own and the experiment’s usage of these volumes is important for smooth access to data and simulation samples. To see your persistent usage visit here (bottom left):

And to see the total volume usage at Rucio Storage Elements around the world:

Resource DUNE Rucio Storage

Note - do not blindly copy files from personal machines to DUNE systems.

You may have files on your personal machine that contain personal information, licensed software or (god forbid) malware or pornography. Do not transfer any files from your personal machine to DUNE machines unless they are directly related to work on DUNE. You must be fully aware of any file’s contents. We have seen it all and we do not want to.

Commands and tools

This section will teach you the main tools and commands to display storage information and access data.

ifdh

Another useful data handling command you will soon come across is ifdh. This stands for Intensity Frontier Data Handling. It is a tool suite that facilitates selecting the appropriate data transfer method from many possibilities while protecting shared resources from overload. You may see ifdhc, where c refers to client.

Note

ifdh is much more efficient than NFS file access. Please use it and/or xrdcp/xrootd when accessing remote files.

Here is an example of copying a file. Refer to the Mission Setup for setting up DUNELAR_VERSION.

Note

For now, do this in the Apptainer.

Do the standard SL7 setup.

Once you are set up:

export IFDH_TOKEN_ENABLE=1 # only need to do this once

ifdh cp root://fndcadoor.fnal.gov:1094/pnfs/fnal.gov/usr/dune/tape_backed/dunepro/physics/full-reconstructed/2023/mc/out1/MC_Winter2023_RITM1592444_reReco/54/05/35/65/NNBarAtm_hA_BR_dune10kt_1x2x6_54053565_607_20220331T192335Z_gen_g4_detsim_reco_65751406_0_20230125T150414Z_reReco.root /dev/null

This should go quickly as you are not actually writing the file.

Note, if the destination for an ifdh cp command is a directory instead of a filename with a full path, you have to add the “-D” option to the command line.
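One way to avoid forgetting `-D` is a small wrapper that adds it whenever the destination is written with a trailing slash. This is a hypothetical convenience function, not part of ifdh; the trailing-slash convention is our own assumption, and the wrapper simply passes `cp` (with or without `-D`) through to the real `ifdh` command.

```shell
# Hypothetical wrapper: copy one file with ifdh, adding -D when the
# destination is given as a directory (i.e. ends in "/").
ifdh_copy() {
  src=$1
  dest=$2
  case "$dest" in
    */) ifdh cp -D "$src" "$dest" ;;   # destination is a directory
    *)  ifdh cp "$src" "$dest" ;;      # destination is a full file path
  esac
}
```

For example, `ifdh_copy myfile.root /pnfs/dune/scratch/users/${USER}/` would run `ifdh cp -D myfile.root /pnfs/dune/scratch/users/${USER}/` (myfile.root is a placeholder name).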

Before attempting the first exercise, please take a look at the full list of IFDH commands, which you will need to complete it; in particular, cp and rmdir.

Resource: ifdh commands

Exercise 1

Use normal mkdir to create a directory in your dCache scratch area (/pnfs/dune/scratch/users/${USER}/) called “DUNE_tutorial_2025”. Using the ifdh command, complete the following tasks:

- copy /exp/dune/app/users/${USER}/my_first_login.txt file to that directory

- copy the my_first_login.txt file from your dCache scratch directory (i.e. DUNE_tutorial_2025) to /dev/null

- remove the directory DUNE_tutorial_2025

- create the directory DUNE_tutorial_2025_data_file

Note, if the destination for an ifdh cp command is a directory instead of a filename with a full path, you have to add the “-D” option to the command line. Also, for a directory to be deleted, it must be empty.

Note

ifdh no longer has a mkdir command as it auto-creates directories. In this example, we use the NFS command mkdir directly for clarity.

Answer

mkdir /pnfs/dune/scratch/users/${USER}/DUNE_tutorial_2025

ifdh cp -D /exp/dune/app/users/${USER}/my_first_login.txt /pnfs/dune/scratch/users/${USER}/DUNE_tutorial_2025

ifdh cp /pnfs/dune/scratch/users/${USER}/DUNE_tutorial_2025/my_first_login.txt /dev/null

ifdh rm /pnfs/dune/scratch/users/${USER}/DUNE_tutorial_2025/my_first_login.txt

ifdh rmdir /pnfs/dune/scratch/users/${USER}/DUNE_tutorial_2025

mkdir /pnfs/dune/scratch/users/${USER}/DUNE_tutorial_2025_data_file

xrootd

The eXtended ROOT daemon is a software framework designed for accessing data from various architectures in a completely scalable way (in size and performance).

XRootD is most suitable for read-only data access. XRootD Man pages

Issue the following command. Please look at the input and output of the command, and recognize that this is a listing of /pnfs/dune/scratch/users/${USER}/. Try to understand how the translation between an NFS path and an xrootd URI could be done by hand if you needed to do so.

xrdfs root://fndca1.fnal.gov:1094/ ls /pnfs/fnal.gov/usr/dune/scratch/users/${USER}/

Note that you can do

lar -c <input.fcl> <xrootd_uri>

to stream into a LArSoft module configured within the FHiCL file. It can also be done in standalone C++ as

TFile *thefile = TFile::Open("<xrootd_uri>");

or in PyROOT code as

import ROOT

thefile = ROOT.TFile.Open("<xrootd_uri>")

What is the right xroot path for a file?

If a file is in /pnfs/dune/tape_backed/dunepro/protodune-sp/reco-recalibrated/2021/detector/physics/PDSPProd4/00/00/51/41/np04_raw_run005141_0003_dl9_reco1_18127219_0_20210318T104440Z_reco2_51835174_0_20211231T143346Z.root

the command

pnfs2xrootd /pnfs/dune/tape_backed/dunepro/protodune-sp/reco-recalibrated/2021/detector/physics/PDSPProd4/00/00/51/41/np04_raw_run005141_0003_dl9_reco1_18127219_0_20210318T104440Z_reco2_51835174_0_20211231T143346Z.root

will return the correct xrootd uri:

root://fndca1.fnal.gov:1094//pnfs/fnal.gov/usr/dune/tape_backed/dunepro/protodune-sp/reco-recalibrated/2021/detector/physics/PDSPProd4/00/00/51/41/np04_raw_run005141_0003_dl9_reco1_18127219_0_20210318T104440Z_reco2_51835174_0_20211231T143346Z.root
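For FNAL paths, the translation in this example amounts to replacing the /pnfs/dune/ prefix with root://fndca1.fnal.gov:1094//pnfs/fnal.gov/usr/dune/. A minimal sketch of doing that by hand, assuming that single mapping holds (the door host and port are taken from this example), is below; `pnfs_to_xroot` is a hypothetical name, and pnfs2xrootd remains the supported tool.

```shell
# Hypothetical stand-in for pnfs2xrootd, for FNAL /pnfs/dune paths only.
pnfs_to_xroot() {
  case "$1" in
    /pnfs/dune/*)
      # keep everything after /pnfs/dune/ and prepend the xrootd door plus full namespace path
      printf 'root://fndca1.fnal.gov:1094//pnfs/fnal.gov/usr/dune/%s\n' "${1#/pnfs/dune/}" ;;
    *)
      echo "pnfs_to_xroot: not a /pnfs/dune path: $1" >&2
      return 1 ;;
  esac
}

pnfs_to_xroot /pnfs/dune/scratch/users/alice/out.root
```

With the placeholder path above this prints root://fndca1.fnal.gov:1094//pnfs/fnal.gov/usr/dune/scratch/users/alice/out.root.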

Note - if you don’t have pnfs2xrootd on your system

Copy this to your local area, make it executable and use it instead.

You can then

root -l <that long root: path>

to open the root file.

This even works if the file is in Europe - which you cannot do with a direct /pnfs! (NOTE! not all storage elements accept tokens so this may not work for all files)

# Need to set up the root executable in the environment first...

export DUNELAR_VERSION=v10_07_00d00

export DUNELAR_QUALIFIER=e26:prof

export UPS_OVERRIDE="-H Linux64bit+3.10-2.17"

source /cvmfs/dune.opensciencegrid.org/products/dune/setup_dune.sh

setup dunesw $DUNELAR_VERSION -q $DUNELAR_QUALIFIER

setup justin # use justin to get appropriate tokens

justin time # this will ask you to authenticate via web browser

justin get-token # this actually gets you a token

root -l root://meitner.tier2.hep.manchester.ac.uk:1094//cephfs/experiments/dune/RSE/fardet-vd/fd/a6/prodmarley_nue_es_flat_radiological_decay0_dunevd10kt_1x8x14_3view_30deg_20250217T033222Z_gen_004122_supernova_g4stage1_g4stage2_detsim_reco.root

See the next episode on data management for instructions on finding files worldwide.

Note Files in /tape_backed/ may not be immediately accessible, those in /persistent/ and /scratch/ are.

Is my file available or stuck on tape?

Files in /tape_backed/ storage at Fermilab are migrated to tape and may not be on disk. You can check this by running the following in an AL9 window:

gfal-xattr <xrootpath> user.status

If it is on disk you get

ONLINE

If it is only on tape you get

NEARLINE or ‘UNKNOWN’ (this command doesn’t always work on SL7, so use an AL9 window)
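In a script you may want a yes/no answer. The sketch below wraps the same gfal-xattr call and treats any status beginning with ONLINE as "on disk", on the assumption that dCache may also report ONLINE_AND_NEARLINE for a tape-backed file that currently has a disk copy. `on_disk` is a hypothetical helper, and the GFAL_XATTR variable is a hypothetical hook that only exists so the command can be swapped out for testing.

```shell
# Hypothetical helper: succeed if the file (given as an xroot path) has a disk copy.
on_disk() {
  status=$("${GFAL_XATTR:-gfal-xattr}" "$1" user.status 2>/dev/null)
  case "$status" in
    ONLINE*) return 0 ;;   # ONLINE or ONLINE_AND_NEARLINE: readable now
    *)       return 1 ;;   # NEARLINE, UNKNOWN or an error: probably on tape only
  esac
}
```

For example, with XROOTPATH set to the root:// path of your file, `on_disk "$XROOTPATH" && echo "on disk" || echo "not on disk"`.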

The df command

You can find out what types of volumes are available on a node with the command df. The -h option gives human-readable sizes. It will list a lot of information about each volume (total size, available size, mount point, device location).

df -h
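If you only care about the volume holding one particular path, you can ask df about that path directly. The sketch below (a hypothetical helper) uses the POSIX output format `-P`, which keeps each filesystem on one line, and picks out the mount point, total size, available size and device listed in the objectives.

```shell
# Hypothetical helper: summarise the volume that holds a given path.
volume_info() {
  # -P: one line per filesystem (POSIX format); -h: human-readable sizes
  df -P -h -- "$1" | awk 'NR == 2 { print "mount=" $6, "size=" $2, "avail=" $4, "device=" $1 }'
}

volume_info /tmp
```

For example, `volume_info ~` or `volume_info /exp/dune/data` on a GPVM.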

Exercise 3

From the output of the df -h command, identify:

- the home area

- the NAS storage spaces

- the different dCache volumes

Quiz

Question 01

Which volumes are directly accessible (POSIX) from grid worker nodes?

- /exp/dune/data

- DUNE CVMFS repository

- /pnfs/dune/scratch

- /pnfs/dune/persistent

- None of the Above

Answer

The correct answer is B - DUNE CVMFS repository.

Question 02

Which data volume is the best location for the output of an analysis-user grid job?

- dCache scratch (/pnfs/dune/scratch/users/${USER}/)

- dCache persistent (/pnfs/dune/persistent/users/${USER}/)

- CTA tape (/pnfs/dune/tape_backed/users/${USER}/)

- user’s home area (`~${USER}`)

- CEPH data volume (/exp/dune/data or /exp/dune/app)

Answer

The correct answer is A, dCache scratch (/pnfs/dune/scratch/users/${USER}/).

Question 03

You have written a shell script that sets up your environment for both DUNE and another FNAL experiment. Where should you put it?

- DUNE CVMFS repository

- /pnfs/dune/scratch/

- /exp/dune/app/

- Your GPVM home area

- Your laptop home area

Answer

The correct answer is D - Your GPVM home area.

Question 04

What is the preferred way of reading a file interactively?

- Read it across the nfs mount on the GPVM

- Download the whole file to /tmp with xrdcp

- Open it for streaming via xrootd

- None of the above

Answer

The correct answer is C - Open it for streaming via xrootd. Use pnfs2xrootd to generate the streaming path.

Useful links to bookmark

- ifdh commands (redmine)

- Understanding storage volumes (redmine)

- How DUNE storage works: pdf

Key Points

Home directories are centrally managed by the Computing Division and are meant to store setup scripts; do NOT store certificates here.

Network attached storage (NAS) /exp/dune/app is primarily for code development.

The NAS /exp/dune/data is for storing ntuples and small datasets.

dCache volumes (tape, resilient, scratch, persistent) offer large storage with various retention lifetime.

The tool suites ifdh and XRootD allow for accessing data with the appropriate transfer method and in a scalable way.